AI Change Management

Change management is critical, yet badly underserved in this time of rapid AI adoption. I find that both fascinating and concerning.

Over the last few months, I’ve researched dozens of AI projects, and I keep seeing the same pattern: many projects are failing not because of the technology, but because organizations aren’t applying tried-and-true change management best practices.

In this article, I would like to share some insights from my research to help you navigate this challenge, in particular how change management applies to AI implementation.

So far, I have found these to be the 10 most essential areas to focus on to ensure effective adoption:

- A Clear Vision

- Emphasize urgency

- Leadership commitment

- Listen and Involve People (especially those doing the work)

- Build a coalition

- Align objectives: AI solves their problems

- AI Education greatly reduces resistance

- Foster environments for safe experimentation

- Integrate rather than Disrupt (technically: microservices, parallel implementations)

- Integrate feedback loops (AI and people)

Let’s dive deeper into each of these, including some important reference models from established change management frameworks:

Vision

What is the vision you are trying to communicate to your team? Here are some examples that could work:

- Your Time, Your Choice: “AI handles the repetitive stuff you hate doing anyway, so you can spend your time on the work that actually uses your brain and skills.”

- Stop Reinventing the Wheel: “AI remembers the solutions we’ve already figured out, so you’re not starting from scratch every time or hunting down whoever knows the answer.”

- Do Your Best Work, Faster: “AI takes care of the grunt work in your process, so you can focus on the creative and strategic parts where you actually add value, and get it done in less time.”

What you want to move away from are mandates:

- Everyone must use ChatGPT for at least 3 tasks per week

- All customer service responses must be drafted by AI first

- 20% of all tasks must be done by AI before the end of the year

- Use AI to write your reports going forward

- We’re replacing our documentation system with an AI chatbot

These kinds of mandates don’t communicate a purpose or reason behind the change, and if you are not creating meaning for change, people will instinctively assume the worst possible motivations: to replace me, to increase profits at my expense, to diminish my value.

If this is your situation, or if a mandate was passed down to you from above, I think the first order of business is to take that mandate and turn it from a checkbox into a vision for change that is inspiring and rewarding for your team.

Emphasize Urgency

I don’t think this is too hard to do in the world of AI right now. The main point is to remember that urgency can’t be assumed; it still needs to be addressed. Whether the mandate is one you received or one you are giving, change needs some urgency attached to it to overcome the natural inertia with which people and systems resist change. Urgency is what applies the force needed to start the big boulder moving; without it, excuses and “tomorrow” procrastination will overcome your desire for change.

Leadership commitment

This extends from the vision. You don’t just want to talk the talk; you want to walk the walk. What are you going to do to help achieve the goal? How does the goal apply to you? Can you set an example? How will you learn?

Leadership isn’t just about making decisions: it’s about being the advocate and champion for your team’s success. As Peter Drucker (The Effective Executive) would put it, you’re in service to them: listening to their challenges, removing their roadblocks, and ensuring they have what they need to do their best work.

Listen to your team

The best way to be in service to your team is to listen to them. If they have reservations, document them and first try to understand each person’s concerns. If people in your organization have a build-up of issues and concerns that have been mounting, even ones unrelated to your AI transformation, the change can push all that frustration and worry over the edge, and you may be left wondering why there are so many concerns all of a sudden.

Staying in touch with your team is really important. AI can fulfill this promise if you use it to free up people’s time so they can address the problems they are having. But if all you promise is that AI will give people more free time, while they still lack human connection and the ability to “get to zero” with you (the person who can help them improve their work lives), the AI promise will feel shallow and unfulfilling. This applies to any change you bring in, not just AI-driven ones.

You want to be actively removing obstacles. In his 8-step process for leading change, John Kotter talks about listening as the tool for understanding where the obstacles are, so you can overcome them. If you’re not listening, you’ll never discover where the real obstacles lie.

You want to save people time, but the people doing the work know best what is REALLY taking their time. Focus on first principles: are you sure AI is the fix for the problem your team is having? Maybe the time pressure is caused by other, less glamorous non-AI issues that have immediate fixes, achieve the goal in a fraction of the time, and never bring AI into the equation at all. Perhaps simply batching the questions they receive each day into a single list could reduce their workload by 4 hours a week, and all you did was fix a process.

Listen, think objectively, address real problems felt bottom-up, remember first principles.

Build a coalition

Change is more effective when you build it with a strong coalition. Identify early on who in your coalition will be:

- Your key influencers

- The expert

- And the person with power

You want this team to have credibility, whether internal or external to your organization, so that people trust their guidance, and of course also to ensure your subsequent implementations and process changes are effective and meaningful.

Leadership could be your key influencer, but that role may instead fall to someone inside or outside your team or organization. It could even be your primary skeptic: the person or people most resistant to change. It isn’t always the person who is most enthusiastic. The most resistant people can become the most enthusiastic about change once their needs and concerns are being met. What kind of culture does your team or organization have? Who are your informal influencers?

Align objectives: AI solves their problems

So at this point you have your audience, you have some momentum, and you know from first principles that AI is actually a good fit for the problems people are having.

Does AI solve their problems? If it does, this is where you can get alignment: your problems, needs, concerns, and motivations align with what we want to achieve with AI. Alignment is your buy-in, but if you are still seeing resistance, it could be coming from a lack of education.

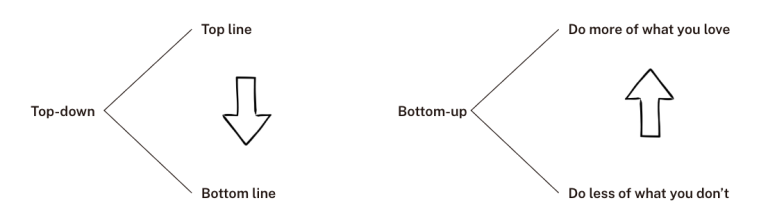

Some companies I have talked to think mostly at the top end of the spectrum:

- How can AI increase my top line? (more revenue)

- How can AI improve my bottom line? (lower costs)

The people in your organization, however, are focused more on:

- How can AI help me do more of the things I want to be doing?

- How can AI help me do less of the things I don’t want to be doing?

Focusing on the value that AI brings people, in the terms that they are thinking of, is really helpful to get buy-in and adoption.

AI Education greatly reduces resistance

I think this is always true, but with AI the effect is even more pronounced. There are many potential sources of resistance, so let’s first identify the most common types you will see:

- People fear losing their job

- AI creates insecurity

- AI is a threat to their sense of identity and self-worth

- Loss of Control/Autonomy

- Privacy & Surveillance Concerns

- Quality & Reliability Fears

- Ethical & Moral Concerns

- Overwhelm & Cognitive Load

- Workflow Disruption

- Trust & Transparency Issues

And those are just a few. That is a lot! However, all hope is not lost, since many of these concerns can be addressed through education.

Studies have shown* that the people who understand AI the most are the least worried about their job security. I am not referring to the AI engineers who build it, but to everyday people doing their regular jobs: integrating and understanding AI makes them less worried about job security, inadequacy, overwhelm, and disruption. They start to see AI’s potential to help them, rather than seeing it as a threat to their existence.

* Sources: (isst.ac) (science direct) (mbs.edu) (frontiers)

Learning about what AI is and how it works doesn’t just show you what AI can do, it shows you what it can’t. This is vital for several reasons:

- Knowing its limitations empowers you

- You are less likely to use AI where it will fail

- You know where to apply it effectively

- It fosters value and self-worth

- AI becomes a tool you know where to use, rather than a threat

If your team is also wrestling with other concerns, such as energy use or ethics, then you will want to incorporate education and policies around those too. For example, an article from Google reports that the energy usage it is modeling is far lower than previously estimated. Diving deeper into this topic will have to wait for another article, though, since AI ethics and morality is a very deep subject with a lot to unpack.

If the ethics of your AI practice are the primary barrier to adoption, and those ethics are also core to your business values, then I would suggest getting your caving gear ready for some deeper spelunking.

Privacy and surveillance concerns are best addressed through AI policies on governance and usage, and through technological solutions. You can secure your AI infrastructure, for example, with an internal model hosted on your own on- or off-premises infrastructure, or by properly assessing your providers’ AI policies on model and data usage. For some companies it might involve “dehydrating” any PII (personally identifiable information) before it is sent to a model for processing and “rehydrating” it when the results return.
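To make the “dehydrating” idea more concrete, here is a minimal Python sketch. It assumes simple regular expressions are enough to spot the PII you care about (here only email addresses and phone numbers); `dehydrate`, `rehydrate`, and `PII_PATTERNS` are hypothetical names, and a real deployment would need much broader detection, typically from a dedicated tool.

```python
import re

# Hypothetical patterns for illustration only: real PII detection needs far
# broader coverage (names, addresses, account numbers, and so on).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def dehydrate(text: str) -> tuple[str, dict[str, str]]:
    """Replace PII with placeholder tokens; return the text and a lookup table."""
    mapping: dict[str, str] = {}
    for label, pattern in PII_PATTERNS.items():
        def _swap(match: re.Match) -> str:
            # Number tokens by insertion order so each one is unique.
            token = f"<{label}_{len(mapping)}>"
            mapping[token] = match.group(0)
            return token
        text = pattern.sub(_swap, text)
    return text, mapping


def rehydrate(text: str, mapping: dict[str, str]) -> str:
    """Put the original values back into the model's response."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


safe, table = dehydrate("Email jane.doe@example.com or call 555-123-4567.")
# safe == "Email <EMAIL_0> or call <PHONE_1>."
```

Only the dehydrated text goes to the model; the lookup table stays inside your own infrastructure, and `rehydrate` restores the original values in the response.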

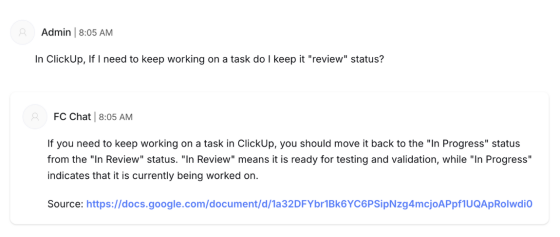

Trust and transparency issues can be addressed through integration into the solution: make sure people stay involved to control quality, build in human and AI feedback systems, and don’t put AI systems into production without testing them.

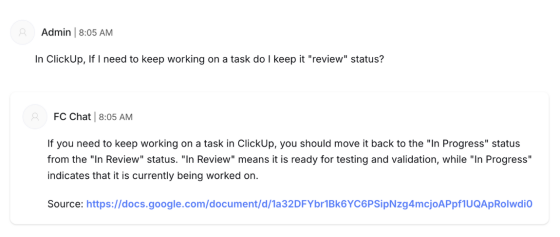

Building trust and transparency into the structure of your solution can also really help. For example, based on your conversations with the team and how important this is to them, you may decide to mandate that your AI system include a source for all of its output, one that a person (or another AI system) can check and validate. That source can then accompany every output.
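A source requirement like this can be enforced in code before any output reaches a person. The sketch below assumes each AI response arrives as an answer plus a list of source references (URLs, document IDs, or similar); `AIOutput` and `validate_output` are hypothetical names, not part of any particular library.

```python
from dataclasses import dataclass, field


@dataclass
class AIOutput:
    """An AI answer plus the sources a person or another AI can check."""
    answer: str
    sources: list[str] = field(default_factory=list)


def validate_output(output: AIOutput) -> AIOutput:
    """Reject any output that arrives without at least one checkable source."""
    if not output.answer.strip():
        raise ValueError("empty answer")
    if not any(source.strip() for source in output.sources):
        raise ValueError("output has no source to check against")
    return output


ok = validate_output(AIOutput("Refunds take 5 days.", ["policy-handbook#refunds"]))
```

Rejecting unsourced output at this boundary makes the mandate automatic rather than something reviewers have to remember.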

Foster environments for safe experimentation

Another method that works is giving people the space to explore, ask questions, and build experience with AI in a safe container. These experimentation spaces can generate project ideas of their own and lead to a lot of empowerment and confidence. It is a different form of education: getting your hands dirty and trying things out.

For example, in one organization I saw someone experiment with installing and training local AI models, then share what becomes possible once a local model is trained on valuable data.

Sharing these kinds of insights with co-workers generates a lot of confidence, new skills, and new ideas.

Some structure here is still important:

- Make the goal and intent of the experiments clear

- Make sure you have your governance in place to ensure company data is not being shared with untrusted platforms/companies

- Make sure your experiments don’t become policy without a plan

- Don’t make it a substitute for the listening, vision, leadership and engaging.
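For the governance point in the list above, one simple guard is an allowlist of approved AI endpoints that every experiment must pass through before company data leaves. This is only a sketch: the host names in `APPROVED_AI_HOSTS` are placeholders, and `is_approved_endpoint` and `require_approved` are hypothetical helpers.

```python
from urllib.parse import urlparse

# Placeholder hosts: replace with the platforms your governance process approved.
APPROVED_AI_HOSTS = {"ai.internal.example.com", "approved-vendor.example.net"}


def is_approved_endpoint(url: str) -> bool:
    """Return True only if the request targets an approved AI host over HTTPS."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in APPROVED_AI_HOSTS


def require_approved(url: str) -> None:
    """Raise before any company data is sent to an unapproved platform."""
    if not is_approved_endpoint(url):
        raise PermissionError(f"{url} is not an approved AI platform")
```

Matching on the exact host name (rather than a substring) keeps look-alike domains such as `ai.internal.example.com.evil.io` from slipping through.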

On its own, experimentation will still leave you with major issues; it is a good tool to support the rest of change management, not a replacement for it. Experimentation also creates some risks and costs. For example, you risk an experiment being adopted before it has been properly evaluated or has shown a clear ROI. Or a tool may become widely adopted without proper assessment, and six months later you need to replace it with something else, which can be very disruptive.

So I would add this step where teams want space to learn and experiment, and then set the constraints. Ideas and examples that come out of it need to be developed safely and still be assessed against the company’s overall timeline and approach.

If done right, experimentation is really valuable though, especially if your company has a vision of becoming an AI-first company, where everyone is pro-AI and sees it as the latest skill and tool-kit to master in their quest for learning and innovation.

Integrate rather than Disrupt

Next, it is time to consider your rollout. Focusing on integration rather than disruption is much more likely to succeed. If the change mainly removes grinding, repetitive churn, you may be able to roll it out right away; otherwise, you may need to consider a parallel implementation, where people can use the new system optionally rather than being required to.

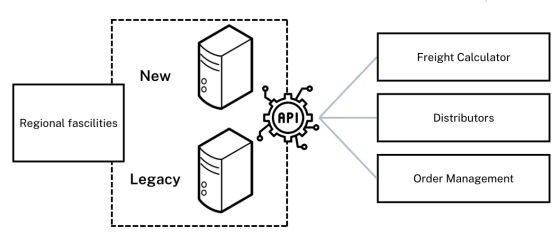

Microservices, a second technical approach to building out solutions, let you release new features incrementally and swap out parts of a larger process as you implement and release each feature.

The core benefit of microservices is that you release self-contained features with well-defined inputs and outputs, so they can be added and removed incrementally. By integrating this approach with your AI initiatives, you make it easier to release change incrementally, or to swap various services in and out for different people or at different times as they improve.
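As a rough illustration of swapping services in and out per person, here is a Python sketch that routes each user either to the existing step or to a new AI-backed one. It is an in-process stand-in: in a real microservice setup each function would be a separately deployed service behind its own API, and `legacy_summary`, `ai_summary`, `SERVICES`, and `OPT_IN` are all hypothetical. The opt-in table also doubles as a parallel implementation, since anyone not listed keeps the current system.

```python
from typing import Callable

Summarizer = Callable[[str], str]


def legacy_summary(text: str) -> str:
    """Existing, non-AI step: keep only the first sentence."""
    return text.split(".")[0].strip() + "."


def ai_summary(text: str) -> str:
    """Placeholder for a new AI-backed service (hypothetical)."""
    return "[AI] " + legacy_summary(text)


# Each entry could be a separate service; swapping one never touches the others.
SERVICES: dict[str, Summarizer] = {"legacy": legacy_summary, "ai_v1": ai_summary}

# Who has opted in to which version; everyone else stays on the legacy step.
OPT_IN: dict[str, str] = {"alice": "ai_v1"}


def summarize(user: str, text: str) -> str:
    """Route each user to their chosen service, defaulting to the existing one."""
    service = SERVICES[OPT_IN.get(user, "legacy")]
    return service(text)
```

Rolling the new version out to more people, or rolling it back, is then a change to `OPT_IN` rather than a change to the process itself.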

Feedback loops (AI and people)

You’ve got a system up, people don’t feel disrupted by it, and they feel heard… you’re almost there! Next, we want to know what people are thinking and how it is working for them, and to build in that self-improvement loop.

If you are building an AI system with a user interface, it can’t hurt to engage a UI/UX specialist who can do UX research to gather insights from people directly in an internal user testing phase.

What do people like? What do they wish it did? Will they use it? Are they excited about what is built or is being built?

If we are evaluating the output:

- Is it meeting expectations (80% accurate? 99% accurate? 99.999% accurate?)

- How can the system be improved?

- What could or should it also be doing?
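One way to answer the first question is to have people review a sample of outputs, record the correct answer for each, and compare the measured accuracy against the target you agreed on. The sketch below assumes exact-match answers (for example, a category label); free-text output would need a softer comparison, and `accuracy` and `meets_target` are hypothetical names.

```python
def accuracy(predictions: list[str], reviewed: list[str]) -> float:
    """Share of AI outputs that match the answer a human reviewer recorded."""
    if len(predictions) != len(reviewed) or not predictions:
        raise ValueError("need equally sized, non-empty samples")
    matches = sum(p == r for p, r in zip(predictions, reviewed))
    return matches / len(predictions)


def meets_target(predictions: list[str], reviewed: list[str], target: float) -> bool:
    """Compare measured accuracy with the agreed target (e.g. 0.8 or 0.99)."""
    return accuracy(predictions, reviewed) >= target
```

With four outputs where reviewers corrected one, accuracy is 0.75: good enough for a 70% target, not for 80%.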

Integrating human feedback reinforces and closes the loop by making people part of the solution: you get deep buy-in while also improving the very thing you are building. It really is a win-win.

What we must not forget here, though, is the iterative learning of the AI system itself. AI offers a new kind of self-learning that didn’t previously exist.

Sure, with older systems a human could make notes about how a program could be better, and perhaps you could write code that uses logged errors to signal a needed change in the code itself… but nothing really like self-reflection.

You can now, and absolutely should, include AI self-reflection in your system designs.

If you tie an AI system to metrics related to its performance and it is not hitting its targets, at a minimum give the AI the task of analyzing why it thinks that is, and how it could improve its performance next time.
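A minimal version of this reflection step is to detect missed targets and hand the model a prompt asking it to explain the shortfall. The sketch assumes higher values are better for every metric and leaves out the actual model call, since that depends on your provider; `Metric` and `reflection_prompt` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Metric:
    name: str
    actual: float
    target: float

    @property
    def missed(self) -> bool:
        # Assumes higher is better; invert the check for metrics like error rate.
        return self.actual < self.target


def reflection_prompt(metrics: list[Metric]) -> Optional[str]:
    """Build a self-review prompt for the AI when any metric misses its target."""
    missed = [m for m in metrics if m.missed]
    if not missed:
        return None  # nothing to reflect on this cycle
    lines = [f"- {m.name}: {m.actual:.2f} (target {m.target:.2f})" for m in missed]
    return (
        "You did not hit these performance targets:\n"
        + "\n".join(lines)
        + "\nAnalyze why you think this happened and propose specific changes "
        "to improve next time."
    )
```

The model’s answer can then be logged for people to review, which keeps humans in the same feedback loop as the AI.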

Implementing this effectively is an entirely new topic and goes well beyond human change management, so I will leave it here as a stub for some further deep-sea diving. As it relates to change management and the feedback loop, though, it is just something you don’t want to forget.

Have people give feedback on how systems can be improved to raise their quality of life, job performance, satisfaction, and productivity.

And tie people into a feedback cycle that helps the AI systems improve too, so that those systems support human activity and help people achieve a higher quality of life, greater output, satisfaction, and productivity.

Wrapping it up

In this time of rapid AI adoption—with all the anxiety it creates about jobs, skills, and relevance—the human element has never been more critical to getting return on our AI investments. The companies that succeed will be the ones that invest in both the technological and human elements of the equation. Back in 1982, Blumberg & Pringle proposed a simple formula that still holds true:

Performance = Ability × Motivation × Opportunity

In the context of AI implementation:

- Ability is your measure of the AI’s effectiveness and impact

- Opportunity is the time and resources freed up by AI systems

- Motivation is where it all comes together—or falls apart

With zero motivation, your entire formula collapses to zero, no matter how powerful your AI is and no matter how much opportunity it creates.
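A quick worked example shows why the multiplication matters. The sketch assumes each factor is scored on a 0 to 1 scale, which is an illustrative simplification rather than part of Blumberg & Pringle’s model; `performance` is a hypothetical helper.

```python
def performance(ability: float, motivation: float, opportunity: float) -> float:
    """Multiplicative model: each factor scored on an assumed 0-1 scale."""
    for value in (ability, motivation, opportunity):
        if not 0.0 <= value <= 1.0:
            raise ValueError("scores are assumed to lie between 0 and 1")
    return ability * motivation * opportunity


strong_ai_no_buy_in = performance(ability=0.9, motivation=0.0, opportunity=0.8)
```

Here `strong_ai_no_buy_in` is `0.0`: strong ability and plenty of opportunity cannot compensate for zero motivation, whereas in an additive model they would still score well.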

Change management is fundamentally about building and sustaining that motivation. Therefore I believe that these 10 areas aren’t nice-to-haves. They’re the difference between an AI system that transforms your business and one that quietly gets abandoned six months after launch.