Assessing Your Organization’s AI Readiness: A Framework for Mid-Size to Enterprise Companies

Most mid-size and enterprise organizations know they need to do something with AI. Knowing where your company sits in its AI journey helps you decide what to do next.

We’re seeing companies at wildly different stages of AI maturity. Some are still building internal understanding and haven’t allocated budget yet. Others have AI running in multiple departments with dedicated leadership and roadmaps. The gap between these organizations comes down to how well strategy, culture, data, and governance work together.

Understanding where you are on the AI maturity curve helps you avoid two common mistakes: trying to run before you can walk, or moving so cautiously that you fall behind competitors who are learning faster.


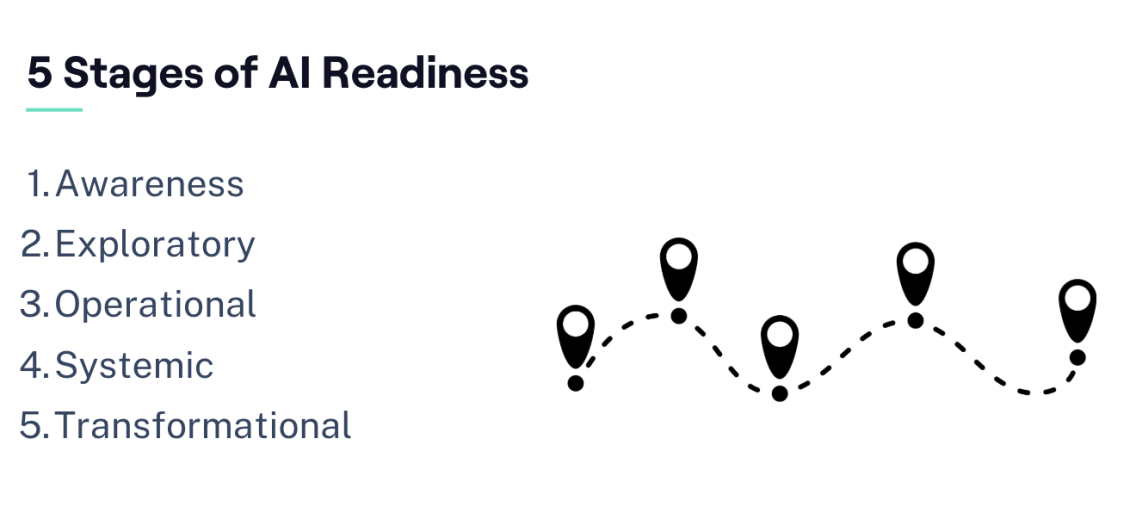

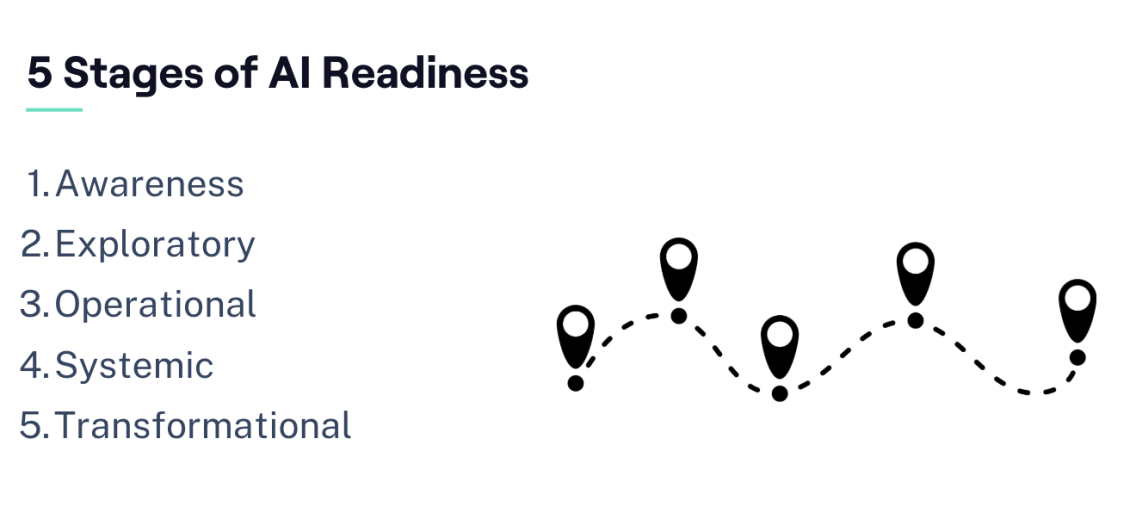

The Five Stages of AI Readiness

Several established frameworks exist for evaluating AI maturity. Gartner’s five-stage model provides a useful high-level view, though McKinsey and Forrester have developed similar approaches. While the specifics vary, they generally identify these progression stages:

Stage 1: Awareness

Your organization recognizes that AI matters and you’re gathering information. There’s no dedicated AI budget yet, no department responsible for AI initiatives, and no formal roadmap. The focus here is building understanding, developing strategy, and generating organizational will to actually implement AI.

This stage focuses on building the internal case for why AI matters to your specific business and what you’re willing to commit to making it work.

Stage 2: Exploratory

At this stage, you’re running experiments and pilot projects. Teams are testing AI tools to build confidence and understand potential applications. This experimentation creates positive momentum. People start seeing what’s possible and develop interest in expanding AI use.

The exploratory stage serves an important purpose: it builds organizational confidence and trust. However, it shouldn’t become your foundation for moving forward. Experiments demonstrate potential, but they don’t create the systematic capabilities you need for real AI integration.

Stage 3: Operational

AI is running within your organization in at least one area. A specific project, product, or service has been institutionalized with AI capabilities. To fully reach this stage, you’ve also established governance: policies around how AI should and shouldn’t be used, data security requirements, and guidelines for safe implementation.

This stage marks the shift from “playing with AI” to “running our business with AI” in at least one meaningful way.

Stage 4: Systematized

The operational stage expands across multiple departments and functions. Different teams are using AI for various purposes. You likely have an AI leader or dedicated team managing implementation, a formal roadmap for expansion, and budget allocated specifically for AI initiatives.

AI becomes part of how multiple parts of your organization operate, with coordination and strategy connecting these various implementations.

Stage 5: Transformational

At the transformational stage, AI is woven throughout your operations. The distinction between “AI parts” and “non-AI parts” of your business largely disappears.

Some companies reach this stage by starting with AI-powered products and services for customers. Others begin with internal operations and cost reduction before moving to new revenue streams. The path varies, but the outcome is an organization where AI capabilities are fundamental to how you operate and compete.

Not every organization needs to reach the transformational stage. If you’re operating systematically at stage four with AI integrated across key functions, you’re already well ahead of most companies. The right target depends on your industry, the products and services you offer, your competitive environment, and your strategic goals.

The Seven Domains of AI Maturity

You’re only as strong as your weakest link, and that is especially true of AI maturity. An organization might have excellent technical capabilities but poor governance, or strong leadership commitment with inadequate data infrastructure.

These seven domains reveal where your organization needs attention:

Domain 1: Strategy

Your AI strategy should align with clear business goals. Effective strategy means understanding specifically how AI helps you compete, serve customers better, or operate more efficiently.

Mature AI strategy includes:

- Explicit connection between AI initiatives and business objectives

- Clear thinking about your roadmap and ultimate outcomes

- Understanding of how AI capabilities affect your competitive position

- Concrete, tangible plans rather than vague aspirations

The strategy component answers: Where are we going with AI, and why does it matter to our business?

Domain 2: Value

Are you actually tracking the value your AI initiatives generate? This could be time savings, cost reduction, or revenue impact. It needs to be measured.

For internal AI implementations, value might show up as hours saved per week or reduced operational costs. For customer-facing AI, you’re looking at how it improves your products or services in ways customers care about.

Organizations with high value maturity systematically track whether AI is working and use that feedback to improve or adjust direction.

Domain 3: Governance

Governance covers your policies, oversight, and compliance around AI usage. At the lowest level, some organizations simply prohibit AI use entirely until they develop policies. As maturity increases, governance becomes more sophisticated: clear guidelines about what’s allowed, what isn’t, and how to use AI safely.

Key governance questions:

- Do you have formal policies about AI usage?

- How do you ensure employees don’t share proprietary information with public AI systems?

- What oversight exists to monitor AI usage and ensure compliance?

- How do you handle data security and privacy in AI implementations?

Strong governance means clear guidelines that let people move confidently without restrictive rules that slow everything down.

Domain 4: Engineering

This domain assesses the technical maturity of your AI capabilities. That could be internal engineering teams or external partners you work with, but either way: how sophisticated is your technical implementation?

Engineering maturity includes:

- Team capabilities in AI development, deployment, and maintenance

- Technical infrastructure and development processes

- If you’re doing machine learning or custom model training, how mature are those capabilities?

- Monitoring and maintenance systems to keep AI implementations running well and improving over time

You can’t just build AI systems once and forget about them. Technical maturity includes having the right tools, methods, and processes to maintain and continuously improve your AI implementations.

Domain 5: Data

AI runs on data. This domain evaluates:

- Do you have the data you need for AI implementations?

- What’s the quality of that data?

- How are you organizing, capturing, synthesizing and retaining data?

- If you’re using data to train AI systems, how are you managing and improving that training data over time?

Data maturity often becomes the limiting factor for AI initiatives. You might have excellent technical teams and clear strategy, but if your data is fragmented, low quality, or inaccessible, AI implementations struggle.

Domain 6: Operating Model

Operating model addresses organizational structure around AI:

- Do you have centralized AI leadership or is it distributed across departments?

- Is there a center of excellence or dedicated AI director?

- Does that leadership have actual budget they can allocate?

- How do AI decisions get made and AI initiatives get prioritized?

There’s no single “right” organizational structure, but having a clear one matters so that AI initiatives don’t depend on ad hoc individual efforts.

Domain 7: Culture & People

Culture and people maturity covers change management territory:

- How are you developing AI capabilities in your existing team?

- What’s the organizational mindset around AI? Is it seen as urgent and important?

- Do you have strong leadership support for AI initiatives?

- Are you successfully managing the human side of AI adoption?

This domain often determines whether technically sound AI implementations actually get adopted and used. You can build perfect systems that fail because the culture wasn’t ready or the people weren’t brought along properly.

(Want to go deeper into Change Management for AI? Check out my previous blog post and video on the subject)

Common Challenges at Different Maturity Levels

The barriers you face depend on where you are in the AI maturity journey.

Lower Maturity Challenges

Organizations early in their AI journey typically struggle with:

Finding the right applications: Where should we actually use AI? What problems are good candidates for AI solutions?

Data availability: We don’t have data, so how can we do anything with AI? (This often stops organizations before they realize there are ways to address the data problem.)

Knowledge and literacy gaps: Understanding what AI can and cannot do, and building enough internal expertise to make informed decisions.

Budget and resources: Getting organizational commitment to actually fund AI initiatives.

These challenges are fundamentally about building capability and confidence. They’re real barriers, but they’re also solvable through education, small wins, and strategic planning.

Higher Maturity Challenges

Once you’ve got AI running in your organization, the challenges shift:

Security concerns: How do we keep our data secure? How do we ensure AI implementations don’t create new vulnerabilities?

Data quality and pattern recognition: We have data, but it’s messy. How do we clean it up and extract useful patterns?

Integration complexity: We have AI systems running in different areas, but they don’t talk to each other. How do we integrate these capabilities?

Scaling challenges: We’ve proven AI works in one department. How do we expand this across the organization without rebuilding from scratch each time?

Bias and ethics: As AI systems become more significant to our operations, how do we ensure they’re fair, ethical, and aligned with our values?

These challenges reflect growing sophistication. They’re harder to solve than early-stage problems, but they emerge only after you’ve achieved real AI capability.

How to Assess Your Organization’s Readiness

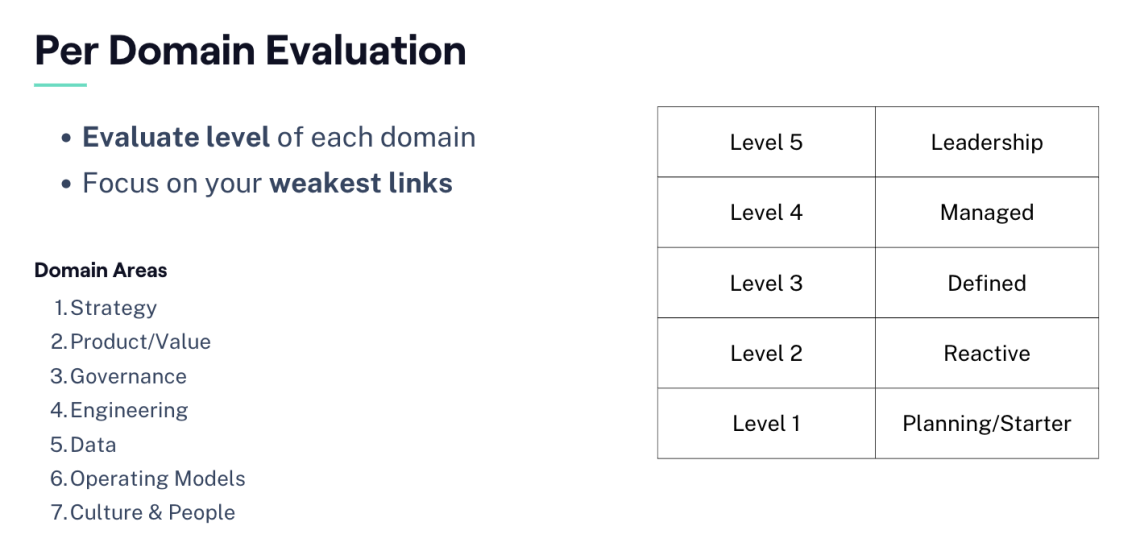

Evaluating your AI maturity across these seven domains gives you a clear picture of where to focus attention.

For each domain, assign a maturity level from 1 to 5 based on your current capabilities. The domains where you score lowest are your highest-priority areas for development.

Evaluation Approaches

Structured interviews: Talk with leaders across your organization about their understanding of AI capabilities, current initiatives, and challenges. These conversations reveal both explicit capabilities and gaps in knowledge or strategy.

Questionnaires: Develop specific questions for each domain. For data maturity: How accessible is our customer data? How clean is it? For governance: Do we have written policies about AI usage? For culture: How do people react when AI initiatives are proposed?

Evidence collection: Look at actual capabilities, not just aspirations. Do you have people successfully running AI projects? Examine their skill levels. Review your data infrastructure. Is data actually organized and accessible? Count the AI initiatives that have moved from experiment to production.

External research: Use AI tools to help you evaluate where you stand. GPT-4, Claude, or other AI systems can help you think through assessment questions and benchmark against industry standards.

The goal isn’t a perfect score in every domain. It’s knowing where your weakest links are so you can address them strategically.

Making Sense of Your Assessment

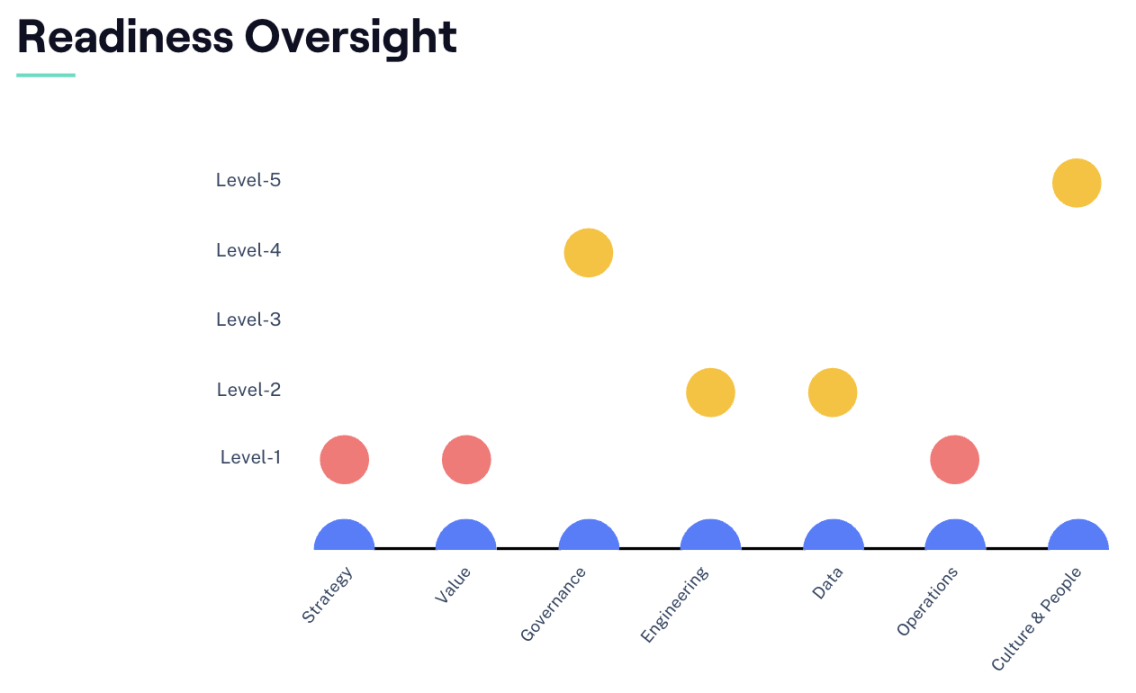

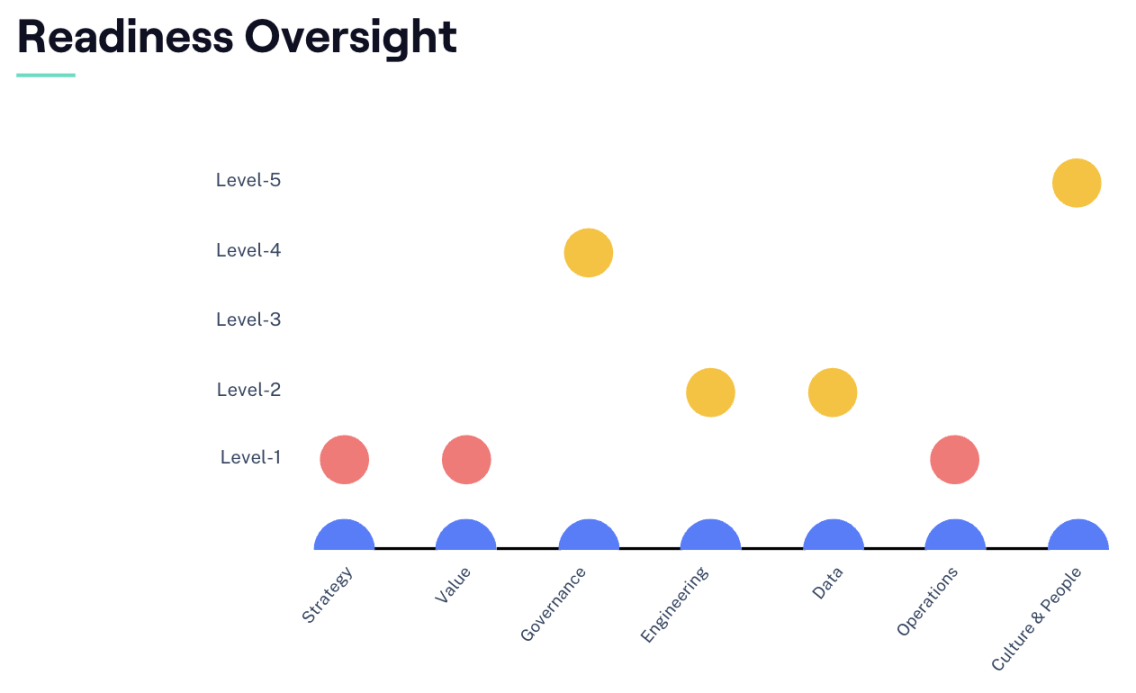

Once you’ve evaluated your organization across the seven domains, you can visualize where you stand. The lowest-scored domain becomes your highest priority because it’s limiting your ability to progress.

Consider an example organization that scores:

- Strategy: Level 1

- Value: Level 1

- Governance: Level 4

- Engineering: Level 2

- Data: Level 2

- Operating Model: Level 1

- Culture & People: Level 5

This pattern tells a clear story: The organization has people who are enthusiastic about AI (high culture score). There’s some data and technical capability from experiments or small projects. Leadership has taken governance seriously and developed strong policies early.

But strategy and value are at level one. There’s no clear direction on why AI matters to the business or how value will be measured. The operating model is also at level one, which implies there’s no dedicated leadership, budget, or organizational structure to drive AI forward.

This organization needs to focus on getting leadership aligned on AI strategy before anything else. The technical capabilities and enthusiasm exist, but without strategic direction, a clear ROI target, and organizational commitment (budget, leadership, structure), AI initiatives will remain scattered experiments that don’t create lasting value.
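To make the "lowest score first" reading concrete, here is a minimal Python sketch that takes the example scores above and surfaces the weakest domains. The function names (`weakest_domains`, `ranked`) are illustrative assumptions, not part of any established framework, and the "Operating Model" key stands for the operations score in the example.

```python
# Scores copied from the example organization (1 = lowest, 5 = highest).
scores = {
    "Strategy": 1,
    "Value": 1,
    "Governance": 4,
    "Engineering": 2,
    "Data": 2,
    "Operating Model": 1,
    "Culture & People": 5,
}


def weakest_domains(scores):
    """Return the lowest level and every domain tied at that level."""
    lowest = min(scores.values())
    return lowest, [domain for domain, level in scores.items() if level == lowest]


def ranked(scores):
    """Return (domain, level) pairs sorted from weakest to strongest.

    sorted() is stable, so tied domains keep the order they were entered in.
    """
    return sorted(scores.items(), key=lambda item: item[1])


lowest, domains = weakest_domains(scores)
print(f"Lowest level: {lowest}")
print("Highest-priority domains:", ", ".join(domains))
for domain, level in ranked(scores):
    print(f"{level}  {domain}")
```

Run on these scores, the sketch flags Strategy, Value, and Operating Model as tied for the lowest level, which matches the reading above. It only ranks the numbers; deciding which of the tied domains to tackle first still takes judgment.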

What Assessment Reveals About Next Steps

Understanding your AI maturity profile determines what you should tackle next.

If your strategy and value domains score low but everything else is moderate to high: You need leadership engagement and clear strategic direction. The organization has capability, but it’s not being directed toward meaningful business outcomes.

If data scores low while technical and strategic maturity are higher: You need to address data infrastructure, quality, and accessibility before ambitious AI projects will succeed. This might mean data engineering investments or developing better data capture processes.

If governance scores low while initiatives are already running: You’re creating risk. Slow down on new projects and establish policies, security practices, and oversight before expanding further.

If culture and people score low despite strong technical capability: You’ve got a change management challenge. Focus on education, involvement, and helping people understand how AI benefits them before pushing more implementations.

The seven-domain assessment helps you avoid the trap of assuming your problem is purely technical. Often strategy, governance, organizational structure, or culture become the limiting factors rather than engineering capability.
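The four patterns above can be sketched as simple rules over the domain scores. This is a rough illustration, not a definitive implementation: the thresholds (`LOW`, `MODERATE`), the `next_steps` function, the `initiatives_running` flag, and the choice to read "technical maturity" as the Engineering score are all assumptions made for the example, and the sample profile is hypothetical.

```python
LOW = 2       # assumption: levels 1-2 count as "low"
MODERATE = 3  # assumption: level 3 and above counts as "moderate to high"


def next_steps(scores, initiatives_running=False):
    """Map a seven-domain score profile to the next-step patterns in the text."""
    steps = []

    # Pattern 1: strategy and value low, everything else moderate to high.
    others = [lvl for dom, lvl in scores.items() if dom not in ("Strategy", "Value")]
    if (scores["Strategy"] <= LOW and scores["Value"] <= LOW
            and all(lvl >= MODERATE for lvl in others)):
        steps.append("Engage leadership and set a clear strategic direction.")

    # Pattern 2: data low while technical and strategic maturity are higher.
    if (scores["Data"] <= LOW and scores["Engineering"] >= MODERATE
            and scores["Strategy"] >= MODERATE):
        steps.append("Fix data infrastructure, quality, and accessibility first.")

    # Pattern 3: governance low while initiatives are already running.
    if scores["Governance"] <= LOW and initiatives_running:
        steps.append("Slow new projects; establish policies, security, and oversight.")

    # Pattern 4: culture and people low despite strong technical capability.
    if scores["Culture & People"] <= LOW and scores["Engineering"] >= MODERATE:
        steps.append("Treat it as change management: educate and involve people.")

    return steps


# Hypothetical profile: capable engineering, weak data and governance.
profile = {
    "Strategy": 4, "Value": 3, "Governance": 2, "Engineering": 4,
    "Data": 2, "Operating Model": 3, "Culture & People": 3,
}
for step in next_steps(profile, initiatives_running=True):
    print("-", step)
```

For this hypothetical profile, the data and governance rules fire and the other two do not. Real profiles rarely match a pattern this cleanly, so treat the output as a prompt for discussion rather than a verdict.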

Moving Forward

AI readiness assessment is not a one-time exercise. It is an ongoing evaluation that you repeat as your capabilities mature and your challenges evolve. What limits you at stage two (data availability, knowledge gaps) is completely different from what limits you at stage four (integration complexity, scaling challenges).

The companies succeeding with AI are the ones who accurately assess where they are, identify their limiting factors, and systematically address them.

Did you like this article? Wish to learn more? You can:

- Research readiness models (Gartner, McKinsey, Forrester)

- Read/watch my article on AI Change Management

- Start by assessing your organization’s maturity level across each domain

- Nominate or hire an advisor or consultant to assess, from the outside in, where your organization stands and which priorities it should address first